User Experience Reviews

In user experience reviews, or heuristic reviews, researchers strive to experience the business firsthand in the same way customers do; then they do the same on competitors’ websites for comparison. Before we examine user experience reviews in detail, it is helpful to explore some guidelines on customer behavior that can help the researcher, or reviewer, make their customer experience as authentic as possible.

Online Customer Behavior

Customers generally display the following tendencies when interacting with an online business:

- Customers are goal-oriented. They’re looking for a place to click on the page or app that will take them to the specific product, service, or content that constitutes their objective.

- Customers scan pages quickly. They skip over details until they’ve found what they’re looking for. For example, they may click through a business’s homepage and several category and product pages rapidly, navigating with the help of images or keywords until they find a product they’re interested in; only then are they likely to read details such as the price, feature list, reviews, and shipping policy. They are very good at ignoring anything that looks like advertising, a tendency that is sometimes called banner blindness.

- Customers don’t act like professionals. They don’t think about whether the navigation is consistent, which page they’re on within the site, how individual page elements are working, what the business is trying to get them to do next, and so forth.

Experiential Business Review

Researchers first choose a realistic customer goal from the prepared list and then follow a “customer scenario,” the actions a customer would take to pursue that goal. It is important here to step out of the professional mind-set and simply experience the website as a customer would. If the reviewers can relate personally to the goal, all the better. For example, for a retail business the reviewers could go through the “browse and purchase” process for an item they’re interested in buying or would like to get as a birthday gift for a friend; for a travel business, the reviewers could look into booking their next trip.

Taking notes along the way, reviewers should strive to do anything customers would do—call customer support, abandon the site when unable to attain their goal easily, and so on. If many customers visit the site from mobile devices, reviewers should try going through the scenarios using a phone or tablet.

After experiencing one scenario, the reviewers retrace each step of the experience to critique it as a professional, noting any opportunities for improvement. Was it easy to accomplish the goal? Were some elements especially difficult to navigate? Were there steps that confused the reviewers? The reviewers then repeat this process for the remaining customer goals.

Experiential Competitive Review

Because customers also visit competitors’ websites, reviewers should do so, too. They follow the same procedure as before: they work through the same scenarios as a customer would and then return to each step to critique the experience as professionals. To make the experience especially organic, reviewers may even start on their own business’s site and then abandon it for a competing site, either when their natural browsing behavior leads them to or at the point where analytics data shows customers tend to abandon.
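To pick an abandonment point from analytics data, reviewers can compare how many visitors reach each consecutive step of a scenario. The following is a minimal Python sketch of that idea, assuming the analytics tool can export visitor counts per step. The function names, step names, and counts are hypothetical placeholders for illustration, not real data.

```python
def drop_off_rates(step_counts):
    """Return (step, next_step, share_lost) for each consecutive pair of steps.

    step_counts: ordered list of (step_name, visitors) tuples.
    """
    rates = []
    for (step, visitors), (next_step, next_visitors) in zip(step_counts, step_counts[1:]):
        # Share of visitors at this step who never reach the next one.
        lost = (visitors - next_visitors) / visitors if visitors else 0.0
        rates.append((step, next_step, lost))
    return rates


def biggest_drop_off(step_counts):
    """Return the transition where the largest share of visitors leaves."""
    return max(drop_off_rates(step_counts), key=lambda rate: rate[2])


# Placeholder numbers for illustration only.
funnel = [("homepage", 1000), ("category page", 600), ("product page", 450), ("cart", 90)]
step, next_step, lost = biggest_drop_off(funnel)
print(f"Largest drop-off: {step} -> {next_step} ({lost:.0%} of visitors lost)")
```

The transition with the largest share lost is a reasonable place for reviewers to leave their own site and continue the scenario on a competitor’s site.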

Documenting Experiential Reviews

It’s important to document the heuristic reviews, both to share the findings and to prepare for the observation of real customer interactions. The documentation should consist of screenshots showing the step-by-step interaction path, notes explaining the screenshots, and an updated list of qualitative questions based on the review. For example, researchers may ask whether customers also struggle or get confused where the reviewers did, or whether users find it easy to navigate the same paths as the reviewers.

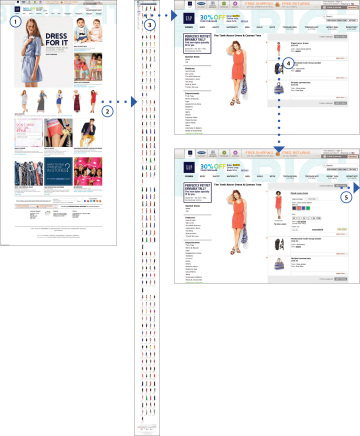

The following example illustrates how this process typically unfolds through a user experience review of the Gap, Inc. conducted in 2013. Figure 4.1 encapsulates the main steps of the experiential review.

Figure 4.1 It’s easy for customers to get confused or frustrated online. In this example of a user experience review, the customer would have abandoned the site after struggling to purchase the red dress she liked on the homepage.

The accompanying notes for the steps in the figure include:

- Reviewer visits Gap.com to look for a summer dress.

- Reviewer sees red dress in middle right that looks cute and clicks on it. Reviewer is not taken to the dress but to a page listing many dresses that don’t look like the one she clicked.

- Reviewer sees a similar dress in a different color on the second row and clicks on it. Reviewer decides to purchase it. She tries to change the color to red by clicking on the large image of the dress in the middle of the screen, but doing so simply shows a zoomed-in view of the dress.

- Just before abandoning, reviewer clicks on the small image of the dress in the middle of the screen.

- Reviewer now sees the color palette and realizes that the dress is no longer available in red. She decides to buy it in coral as pictured. She clicks on “add to bag” but nothing happens, so she leaves to shop at a competitor’s site. (The “add to bag” link failed because she first needed to select a size; there was no error message explaining this.)
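The final step shows a silent failure: the button did nothing because no size was selected, and no message said so. The following is a minimal Python sketch of the alternative behavior, in which the handler always explains why an item was not added. It is not the site’s actual code; `add_to_bag`, `AddToBagResult`, and the product ID are hypothetical names invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AddToBagResult:
    added: bool
    message: str  # always populated, so the customer is never left guessing


def add_to_bag(product_id: str, color: Optional[str], size: Optional[str]) -> AddToBagResult:
    """Hypothetical handler: validate the selection and explain any failure."""
    # Collect every option the customer has not chosen yet.
    missing = [name for name, value in (("color", color), ("size", size)) if not value]
    if missing:
        return AddToBagResult(
            added=False,
            message="Please select a " + " and ".join(missing)
            + " before adding this item to your bag.",
        )
    return AddToBagResult(
        added=True,
        message=f"Added {product_id} ({color}, size {size}) to your bag.",
    )


# The reviewer's situation: color chosen, size not yet selected.
result = add_to_bag("dress-123", "coral", None)
print(result.message)
```

Returning a message alongside the success flag keeps the failure visible to the customer instead of leaving the click with no apparent effect.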

Upon further critiquing the experience, the following questions were generated for the observational stage of the research:

- Do customers also struggle to find the product page for an item they liked on the homepage?

- Even though it was jarring to be taken to a page with hundreds of dresses after clicking on a single one, would it be a good customer experience to arrive on a page like that after clicking on the “Dresses” category in the Women’s section of the site?

- Do customers also struggle to figure out what colors an item comes in and how to choose a different color?

- Do customers also find it difficult to add an item to their cart?

- Do customers also try to buy before selecting a size and get confused when no message reminds them to pick a size or offers to help them do so?

Researchers seek to confirm that these questions reflect real customer problems by observing customers interacting with the site and by digging into the site analytics. Team members then create hypotheses, or proposed answers to these questions, and attempt to vet the hypotheses through test ideas, which are added to the Optimization Roadmap.

Don’t Act Too Quickly

The purpose of the user experience review is to gain insights into the customers’ experiences by stepping into their shoes for a moment. However, team members should resist making immediate changes to the site based solely on heuristic reviews unless clear technical errors are uncovered. Otherwise, the changes may reflect the perspective of online professionals, not the typical customer.

Conducting realistic user experience reviews is a skill that can be learned over time, but improvement requires an adequate feedback loop: Reviewers need to watch actual customers interact with the business and test new designs, not simply criticize the company’s site in a vacuum.

Researchers must also exercise caution when exploring competitors’ sites. All too often businesses assume a competitor’s designs are effective and simply copy them without finding out whether these designs would work for their own business. As a result, not only do they risk adopting ineffective designs, but their sites also wind up looking very similar to those of their competitors.

One way to avoid this pitfall is to review sites outside of the business’s circle of competitors when looking for new ideas. No matter where they find new concepts, companies must test them before pushing them live to all customers.