- Activity themes

- Activity types

- Creating an activity

- Best practices

- Summary

Activity types

Now that we have walked through the common features that apply to all activity types, let’s dive into the various types available so you can see the value each one offers, both in visitor management and in the data it provides. Understanding the activities available in Adobe Target is important because each one lets you apply a different strategy to your optimization efforts.

A/B/n activities

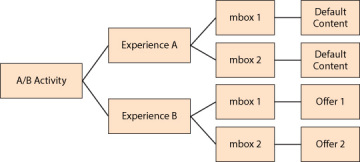

A/B/n is by far the most popular activity type and, as you might imagine, it lets you compare two or more experiences. Here is the architecture of a standard A/B activity with two different offers assigned to two different mboxes.

Figure 4.2 Visual example of the components that make up an A/B activity.

As you can see, each experience comprises separate offers that compete against each other. When a visitor qualifies for, or is randomly placed in, Experience B, that visitor sees Offer 1 on any page where mbox 1 is placed and Offer 2 on any page where mbox 2 is placed. The mboxes can be on different webpages, so a visitor who encounters only mbox 1 is presented with only Offer 1. Also note that every experience uses the same mboxes, which ensures you are comparing apples to apples in your reports. Adobe Target manages which experience a visitor sees based on the rules, or activity structure, that you create.

An important detail of the A/B/n activity is that whichever experience, or branch, of the activity visitors join, they remain in that experience for the life of the activity. That is, if they continue to visit the area being tested, they continue to see that test content until they complete the conversion event you set up.
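Adobe Target keeps track of each visitor’s experience membership on its own side, so you never implement this yourself. Purely to illustrate why membership is sticky, here is a minimal Python sketch that assumes a hash of the visitor ID and a hypothetical activity ID decides the experience; it is not how Target actually stores membership.

```python
import hashlib


def assign_experience(visitor_id: str, activity_id: str, experiences: list[str]) -> str:
    """Map a visitor to one experience of an activity, the same way every time.

    Illustration only: hashing visitor_id together with a hypothetical
    activity_id yields a stable bucket, so repeat visits land in the same
    experience for the life of the activity.
    """
    key = f"{activity_id}:{visitor_id}".encode("utf-8")
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return experiences[bucket % len(experiences)]


experiences = ["A", "B"]
first_visit = assign_experience("visitor-123", "homepage-hero-test", experiences)
later_visits = [assign_experience("visitor-123", "homepage-hero-test", experiences) for _ in range(5)]
print(all(visit == first_visit for visit in later_visits))  # True
```

Because the bucket depends only on the two IDs, the visitor lands in the same experience on every visit, while different visitors still spread roughly evenly across experiences.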

Multivariate, or MVT, activities

Multivariate testing (MVT) is a somewhat controversial topic in the testing world, with many schools of thought and much debate about whether it is as beneficial as A/B testing. For this book, we’ll set that controversy aside and examine how Adobe Target approaches MVT testing.

The default, or productized, MVT approach in the Adobe Target platform is the Taguchi approach: a partial factorial methodology in which only a portion of the possible combinations of elements and alternatives is delivered to the site, and full results are extrapolated from the experiment. The key benefit Adobe Target advocates is speed: because fewer experiences need traffic, results arrive sooner.

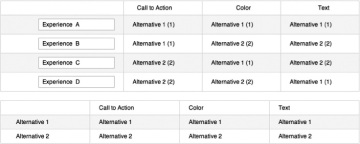

Here is an example of the Taguchi approach. Say you have three elements, or pieces of content, that you wish to test, with two alternatives for each element: the call to action, the color, and the text. Three elements with two alternatives each represent a 3X2 MVT design. If you mixed and matched every element and alternative, all possible combinations would total eight (2^3 = 8). With the Taguchi approach, only four of those eight combinations, chosen by the Taguchi model, are tested. Figure 4.3 shows an example of a test design created by Adobe Target for a 3X2 MVT.

Figure 4.3 A sample multivariate test design created by Adobe Target

The Taguchi approach becomes especially handy when you have more than three elements. Testing eight experiences rather than four, as in the previous example, is not much of a challenge; testing seven elements, each with two alternatives, is. A 7X2 MVT with all possible combinations would require testing 128 experiences (2^7), whereas the Taguchi approach compares only eight.
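To make these counts concrete, the following Python sketch compares full factorial designs with two-level orthogonal arrays, the kind of fractional design the Taguchi method draws on. The parity construction is a textbook way to build an L4 array (3 elements, 4 experiences) and an L8 array (7 elements, 8 experiences); it is an assumption for illustration and not necessarily the exact design Adobe Target generates.

```python
from itertools import combinations, product


def full_factorial(num_elements: int, levels: int = 2) -> list[tuple[int, ...]]:
    """Every mix of alternatives: levels ** num_elements experiences."""
    return list(product(range(levels), repeat=num_elements))


def two_level_orthogonal_array(k: int) -> list[tuple[int, ...]]:
    """Two-level orthogonal array with 2**k experiences and 2**k - 1 elements.

    Column m (m = 1 .. 2**k - 1) holds the parity of the bits shared by the
    run index r and m. k=2 gives an L4 array, k=3 an L8 array.
    """
    runs = 2 ** k
    return [tuple(bin(r & m).count("1") % 2 for m in range(1, runs)) for r in range(runs)]


def is_orthogonal(design: list[tuple[int, ...]]) -> bool:
    """True if every pair of two-alternative elements shows each pairing equally often."""
    for i, j in combinations(range(len(design[0])), 2):
        counts: dict[tuple[int, int], int] = {}
        for row in design:
            counts[(row[i], row[j])] = counts.get((row[i], row[j]), 0) + 1
        if len(counts) != 4 or len(set(counts.values())) != 1:
            return False
    return True


l4 = two_level_orthogonal_array(2)  # 3 elements, 2 alternatives each
l8 = two_level_orthogonal_array(3)  # 7 elements, 2 alternatives each
print(len(full_factorial(3)), len(l4))       # 8 4
print(len(full_factorial(7)), len(l8))       # 128 8
print(is_orthogonal(l4), is_orthogonal(l8))  # True True
```

Each row is one experience and each column says which alternative of an element it uses. Because every pair of columns is balanced, each element’s effect can be estimated from a fraction of the full factorial, provided the elements do not interact strongly.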

The reporting for an Adobe Target MVT test is very similar to what you would expect from any other test technique, with one exception: for MVT tests, Adobe Target provides an Element Contribution report that has two primary benefits.

Adobe Target collects the results from running the specified experiences and predicts which combination of options will offer the best result, even if that combination was not delivered to visitors. That best combination is called the Predicted Best Experience.

The first benefit is that, when you test only a subset of all possible combinations, you receive data on only those tested experiences, and this report fills the gap by presenting a Predicted Best Experience based on the data collected thus far. With a 7X2 MVT Taguchi test design, you test only eight of the 128 possible experiences, so the report indicates which experience would have been best even though the other 120 were never presented to visitors. The predicted best experience is determined using data collected only from the experiences that were actually presented to visitors of the activity.

The other benefit is that the report helps you understand how each element of an experience affects the given success event. This data is incredibly helpful because you can apply it to other test designs. For example, I have seen many Taguchi MVT Element Contribution reports indicate, with high statistical confidence, that a certain message approach was very impactful. That message concept can then be incorporated into A/B tests or even offline marketing efforts; in this way, the report identifies themes that carry over into other marketing work as well.

Figure 4.4 shows an example of an Element Contribution report in which you can see each element and its most successful alternative, thereby identifying the best experience even if it wasn’t part of the test design. You can also see that the most influential element was the Submit button.

Figure 4.4 Example of the Element Contribution report as seen in Adobe Target.
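The idea behind the Predicted Best Experience can be sketched with a simple main-effects (additive) model, which is an assumption here rather than Adobe’s documented calculation. The sketch uses a four-experience 3X2 design with made-up conversion rates: it averages each element’s alternatives across the tested experiences, picks the better alternative per element, and combines those picks into a predicted best experience that may never have been shown.

```python
def element_contribution(design: list[tuple[int, ...]], rates: list[float],
                         element_names: list[str]):
    """Main-effects summary of a two-alternative MVT.

    design: one tuple per tested experience, giving the alternative (0 or 1)
    used for each element. rates: observed conversion rate per experience.
    """
    grand_mean = sum(rates) / len(rates)
    report = {}
    for col, name in enumerate(element_names):
        level_means = {}
        for level in (0, 1):
            observed = [rate for row, rate in zip(design, rates) if row[col] == level]
            level_means[level] = sum(observed) / len(observed)
        best = max(level_means, key=level_means.get)
        report[name] = {
            "best_alternative": best,
            "lift": level_means[1] - level_means[0],
            "best_mean": level_means[best],
        }
    predicted_best = tuple(report[name]["best_alternative"] for name in element_names)
    # Additive assumption: grand mean plus each element's best-alternative deviation.
    predicted_rate = grand_mean + sum(report[name]["best_mean"] - grand_mean for name in element_names)
    return report, predicted_best, predicted_rate


design = [(0, 0, 0), (1, 0, 1), (0, 1, 1), (1, 1, 0)]  # four of the eight 3X2 combinations
rates = [0.040, 0.050, 0.046, 0.048]                      # hypothetical conversion rates
elements = ["call_to_action", "color", "text"]

report, best, predicted = element_contribution(design, rates, elements)
most_influential = max(report, key=lambda name: abs(report[name]["lift"]))
print(best, best in design)   # (1, 1, 1) False
print(most_influential)       # call_to_action
print(round(predicted, 3))    # 0.052
```

Here the predicted best experience, (1, 1, 1), was never delivered, and the element with the largest lift plays the role the Submit button plays in Figure 4.4.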

Although Adobe Target’s native approach to MVT is the Taguchi approach, you aren’t limited to running partial factorial MVT tests. I have worked with many clients who use Adobe Target for full factorial MVT tests. To do this, you simply create your test design offline and set it up as an A/B activity within Adobe Target. The post-activity data is then analyzed offline as well to quantify interaction effects.
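The offline analysis of interaction effects can be sketched in Python for the smallest full factorial: two elements with two alternatives each, run as a four-experience A/B activity. The effect formulas are the standard ones for a 2x2 factorial design; the element labels A and B and the conversion rates are hypothetical.

```python
def factorial_effects(rates: dict[tuple[int, int], float]) -> dict[str, float]:
    """Main and interaction effects for a 2x2 full factorial design.

    rates maps (alternative of element A, alternative of element B) to the
    conversion rate observed for that experience of the A/B activity.
    """
    y00, y10, y01, y11 = rates[(0, 0)], rates[(1, 0)], rates[(0, 1)], rates[(1, 1)]
    return {
        "A": (y10 + y11 - y00 - y01) / 2,    # average effect of switching A
        "B": (y01 + y11 - y00 - y10) / 2,    # average effect of switching B
        "AxB": (y00 + y11 - y10 - y01) / 2,  # interaction: does A's effect depend on B?
    }


# Hypothetical results: each change helps alone, but together they clash.
observed = {(0, 0): 0.040, (1, 0): 0.050, (0, 1): 0.050, (1, 1): 0.045}
effects = factorial_effects(observed)
print({name: round(value, 4) for name, value in effects.items()})
# {'A': 0.0025, 'B': 0.0025, 'AxB': -0.0075}
```

A design that estimates only main effects would report both changes as positive and could predict the combined experience as best; the full factorial reveals that the combination underperforms either change alone.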

1:1 activity

The 1:1 activity is an activity type that is available only to those customers who have a 1:1 license with their Adobe Target contract.

The 1:1 activity leverages machine learning models to determine the right content to present to an individual based on how similar individuals have responded to the same content. These models focus on a single success event that you specify in the activity setup. That event may be anything that can happen in a session, such as a click-through, form completion, purchase, revenue per visitor, and so on.

This type of test will have two groups, similar to an A/B test. The first branch serves as a control and is presented to 10 percent of the traffic. These visitors will randomly see any one of the offers that you are using in the test. The engine evaluates this 10 percent of the traffic by observing how visitors react to the content and then correlates that reaction to the profile attributes of those visitors.

This learning is then applied to the other 90 percent of the traffic, so those visitors see the best content based on everything Target knows about them and how similar they are to visitors who responded earlier in the activity.
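The 10/90 routing can be sketched in Python as follows. The split comes from the text; the toy scoring function, the `state` profile attribute, and the offer names are hypothetical stand-ins for Adobe’s machine learning models, which this sketch does not reproduce.

```python
import random
from typing import Callable


def serve_offer(profile: dict, offers: list[str],
                predict: Callable[[dict, str], float],
                rng: random.Random, control_share: float = 0.10) -> tuple[str, str]:
    """Route a visitor to the control or targeted branch and pick an offer."""
    if rng.random() < control_share:
        # Control branch: a random offer; these responses are what the model learns from.
        return "control", rng.choice(offers)
    # Targeted branch: the offer the model scores highest for this profile.
    return "targeted", max(offers, key=lambda offer: predict(profile, offer))


def toy_model(profile: dict, offer: str) -> float:
    """Hypothetical stand-in for a trained model: Californians respond to offer_b."""
    learned = {("CA", "offer_b"): 0.08}
    return learned.get((profile.get("state"), offer), 0.03)


rng = random.Random(42)
offers = ["offer_a", "offer_b", "offer_c"]
branches = [serve_offer({"state": "CA"}, offers, toy_model, rng)[0] for _ in range(10_000)]
print(branches.count("control") / len(branches))  # close to 0.10
```

In the real activity, the model is retrained from the control branch’s responses; the fixed `toy_model` only shows where its predictions plug in.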

I have seen this activity type offer a ton of value to customers on highly trafficked pages, such as a home page or main landing pages. The main benefit here is the automation. You set it up and let it do its thing with minor tweaking here and there.

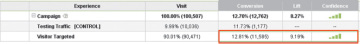

Figure 4.5 shows what a Summary report looks like in the 1:1 activity type, with the two branches of the test.

Figure 4.5 1:1 Summary report example as seen within Adobe Target

The other key value this test type provides is an Insights report. Yes, Adobe has an Insight product for analytics and also a report in 1:1 called Insights. The 1:1 Insights report shows which visitor profile attributes represent a positive or negative propensity toward a given offer. In other words, this report discovers segments or profile attributes that are impactful, such as people from California on their third session responding positively to a particular offer. The Insights report finds such segments for you and provides marketing insight that can be used in other tests or in offline marketing.

1:1 activity display

The 1:1 activity display type is the same as the 1:1 activity type except it is used in offsite display ads instead of a website.

Landing page test/landing page activity

The landing page test/landing page activity type is very different from an A/B test in that visitors can switch branches, or experiences, of a test.

Both the landing page test and the landing page activity allow visitors to switch experiences. The key difference between the two is that the landing page test is used for MVT testing, whereas the landing page activity lets visitors change experiences within an A/B-style design.

This technique is highly effective when your test strategy requires that visitors be able to change branches of a test, compared to the A/B approach in which visitors maintain membership in a particular test branch for the life of the test.

A great example of such a test is based on SEM (search engine marketing) reinforcement. Say you have two ad campaigns running on Google: one promotes a particular product, and the other promotes discount messaging. If you are running a test that quantifies the value of reinforcing the message associated with each traffic source, you would have an A/B/C activity. Experience A would be the default content, or what is currently running on the landing page. Experience B would be targeted to visitors arriving from the first Google SEM ad, with product messaging, and Experience C would be targeted to visitors arriving from the second Google SEM ad, with discount messaging.

To run this scenario effectively, you would want to use the landing page activity in case visitors click through on both SEM ads. With an A/B activity, a user who clicked the first ad, then returned to Google and clicked the second ad, would always see the site experience tailored to the first ad. In contrast, the landing page activity would recognize which ad was clicked and switch the user to the corresponding experience.
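The difference between the two behaviors can be sketched in Python. The ad IDs are hypothetical, and so is the rule that a visit without a recognized ad gets the default Experience A; the sketch shows only the sticky-versus-switching contrast, not how Adobe Target detects the referring ad.

```python
from typing import Optional

# Hypothetical IDs for the two Google SEM ads in the example.
AD_TO_EXPERIENCE = {"product_ad": "B", "discount_ad": "C"}


def ab_experience(memberships: dict[str, str], visitor_id: str, ad: Optional[str]) -> str:
    """A/B behavior: the first qualifying visit decides, and membership sticks."""
    if visitor_id not in memberships:
        memberships[visitor_id] = AD_TO_EXPERIENCE.get(ad, "A")
    return memberships[visitor_id]


def landing_page_experience(ad: Optional[str]) -> str:
    """Landing page behavior: re-evaluated on every visit from the ad just clicked.

    Assumes a visit without a recognized ad gets the default Experience A.
    """
    return AD_TO_EXPERIENCE.get(ad, "A")


memberships: dict[str, str] = {}
clicks = ["product_ad", "discount_ad"]  # the same visitor clicks both ads in turn
print([ab_experience(memberships, "visitor-123", ad) for ad in clicks])  # ['B', 'B']
print([landing_page_experience(ad) for ad in clicks])                  # ['B', 'C']
```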

Monitoring activity

The monitoring activity is typically used to collect data before other tests are run, or to track visitor behavior across activities. The monitoring activity does not typically display content, although it can if needed. It automatically has a lower priority than all other activities, so it displays content only when no other activities are running in the same mbox(es).

A great use case for a monitoring activity is to set a baseline for conversion rates or revenue metrics, such as total sales, revenue per visitor, or average order value. If customers have the mboxes on their site but alternative content isn’t ready, I often recommend starting a monitoring activity, both to see some metrics and to become familiar with the platform. You can also run a monitoring activity to track success through a flow or on a page while a series of tests is run. That way, you can track the improvement of a particular metric over time and run multiple tests along the way.

The monitoring activity was not designed to replace an organization’s analytics, but many organizations use the monitoring activity to provide data on behaviors defined in Adobe Target or to augment analytics with pathing reports.

Another interesting use of a monitoring activity is using it to deploy tags to the site independent of Adobe Target. Before tag management solutions became so popular, the mbox was a nice, easy way to get code to the page (if an mbox was already in place) without involving IT. Nowadays, Adobe Target has a plug-in capability that can handle getting code to a page without setting up a monitoring activity.

Optimizing activity

The optimizing activity technique is, surprisingly, unique to the Adobe Target platform, especially considering how helpful it can be to any optimization team within an organization.

The optimizing activity is not really designed for Adobe Target users to learn which activity experience is best, although it can provide that information. Rather, an optimizing activity is more about automation.

Imagine, if you will, five pieces of content for testing. This content can be home page hero content, navigational elements, calls to action, email content, or really anything you want to evaluate as part of a test design. Typically, you would use an A/B or multivariate activity to see which version increases success events. The optimizing activity, by contrast, doesn’t maintain an equal distribution of test content; it automatically directs traffic to the best-performing experience. If Experience C consistently outperformed the other experiences, the optimizing activity would automatically direct more visitor traffic to that experience.

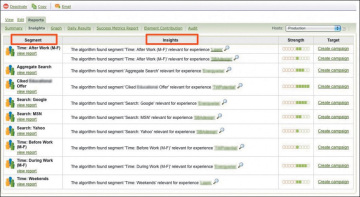

Adobe Target takes the optimizing activity to another level in the way it uses segments in this automation. If you include segments in the activity setup, the optimizing activity automatically presents the most effective experience for each segment. Additionally, the Insights report available for this activity type shows which segments affected which test offers and whether the impact was positive or negative. This is incredibly powerful because the tool does the discovery for you, and you can then create a new campaign targeted to the discovered segment.

Figure 4.6 shows a sample of what you can expect from this Insights report.

Figure 4.6 Sample Insights report available within the Reports section of an optimizing activity

The optimizing activity test technique is most effective for tests run in email campaigns. Say you have an email blast going to 200,000 subscribers and you are running an A/B/C test of content within that email. The optimizing activity can identify which experience in that test design is most successful based on the first visitors who open the email. If, for example, the first 2,000 visitors reacted much more favorably to Experience B, the optimizing activity would shift more and more visitors to Experience B. This approach lets organizations capitalize on test results immediately in short marketing cycles such as email campaigns.
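Adobe Target’s own allocation logic isn’t described here, so the following Python sketch swaps in epsilon-greedy, a common multi-armed bandit strategy, to show the general idea of shifting traffic toward a winner. The true conversion rates only simulate how visitors respond and are hypothetical; the allocator itself sees only observed results.

```python
import random


def simulate_optimizing(true_rates: dict[str, float], visitors: int,
                        epsilon: float = 0.1, seed: int = 7) -> dict[str, int]:
    """Epsilon-greedy sketch of shifting traffic toward the leading experience.

    true_rates are hypothetical conversion rates used only to simulate how
    each visitor responds; the allocator sees only shown/converted counts.
    """
    rng = random.Random(seed)
    shown = {name: 0 for name in true_rates}
    converted = {name: 0 for name in true_rates}

    def observed_rate(name: str) -> float:
        # Untried experiences look perfect, so each one gets tried early on.
        return converted[name] / shown[name] if shown[name] else 1.0

    for _ in range(visitors):
        if rng.random() < epsilon:
            choice = rng.choice(list(true_rates))        # keep exploring a little
        else:
            choice = max(true_rates, key=observed_rate)  # favor the current leader
        shown[choice] += 1
        if rng.random() < true_rates[choice]:
            converted[choice] += 1
    return shown


shown = simulate_optimizing({"A": 0.03, "B": 0.06, "C": 0.03}, visitors=50_000)
print(shown)  # most of the 50,000 simulated opens end up on Experience B
```

The small exploration share keeps collecting data on the trailing experiences, so the allocation can still correct itself if early results were misleading.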

Activity priorities

Now that you know how Adobe Target activities work and understand when to use an A/B/n activity compared to, for example, a landing page activity, it is important that you understand another topic that is crucial to activities: activity priorities.

Activity priorities could have been discussed in the context of common themes, except that this component of an activity requires some special, additional attention and doesn’t apply to all activity types.

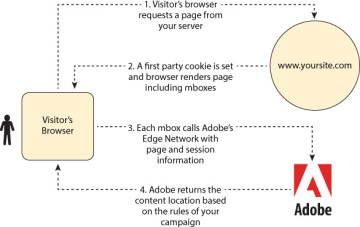

Let’s briefly review what happens when an mbox makes a call to Adobe servers. When a browser, mobile device, or mobile app encounters an mbox, the mbox makes a call to the Adobe Target Global Edge Network. This call passes key information, such as the mbox name, the URL of the page, any data passed to the mbox, and the unique visitor ID that Adobe manages. Figure 4.7 illustrates what happens when an mbox call is made.

Figure 4.7 Step-by-step data flow when an mbox call is made to Adobe servers

When Adobe sees the mbox name, it evaluates whether that mbox is being used in a test and, if so, decides whether this visitor becomes a member of the test. This gets challenging, however, when multiple tests use the same mbox. Adobe has multiple ways to address this scenario, but the most common method is to use activity priorities, which may be set to high, medium, or low.

When Adobe sees the mbox call, it evaluates activity membership from high priority to low. This functionality allows you to be more strategic with your optimization program. A great example of activity priorities in action is an activity targeted to a specific audience sharing a page with another activity that is open to everyone else. For instance, an activity targeted to known customers could have a high priority, whereas the activity open to everyone might have medium or low priority. Adobe will first evaluate whether the visitor is a known customer and should be placed in the higher-priority activity. Of course, because the monitoring activity has a lower priority than all other activities, it is evaluated last.
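The evaluation order can be sketched in Python. The high/medium/low levels, the request fields, and the monitoring activity’s last place come from the text; the `Activity` class, the hypothetical `customerStatus` mbox parameter, and the rule that the first qualifying activity takes the mbox are assumptions for illustration. Ties between activities of equal priority simply keep list order here, which may not match how Adobe Target breaks ties.

```python
from dataclasses import dataclass
from typing import Callable, Optional

PRIORITY_RANK = {"high": 0, "medium": 1, "low": 2}


@dataclass
class Activity:
    name: str
    priority: str                       # "high", "medium", or "low"
    mboxes: set[str]
    qualifies: Callable[[dict], bool]   # audience check against the mbox request
    is_monitoring: bool = False


def select_activity(activities: list[Activity], request: dict) -> Optional[str]:
    """Return the first activity, from high to low priority, that claims the request."""
    def rank(activity: Activity) -> tuple[int, int]:
        # Monitoring activities always sort after every other activity.
        return (1 if activity.is_monitoring else 0, PRIORITY_RANK[activity.priority])

    for activity in sorted(activities, key=rank):
        if request["mbox"] in activity.mboxes and activity.qualifies(request):
            return activity.name
    return None


activities = [
    Activity("baseline-monitoring", "high", {"home-hero"}, lambda r: True, is_monitoring=True),
    Activity("everyone-else", "low", {"home-hero"}, lambda r: True),
    Activity("known-customers", "high", {"home-hero"},
             lambda r: r["params"].get("customerStatus") == "known"),
]

request = {
    "mbox": "home-hero",
    "url": "https://www.example.com/",
    "params": {"customerStatus": "known"},  # hypothetical mbox parameter
    "visitor_id": "visitor-123",
}
print(select_activity(activities, request))  # known-customers
request["params"] = {}
print(select_activity(activities, request))  # everyone-else
```

Even though the monitoring activity is marked high here, it is evaluated last, so it claims the mbox only when no other activity does.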